This is our contribution to the Revision 2015 8K intro competition, where it placed 2nd.

It's a remake of Second Reality, the famous demo by Future Crew, in 8192 bytes. (No, 8K has nothing to do with the resolution.)

You can download the final version, with more transitions but no voices, including a low-res version and 12K versions with a higher-quality soundtrack: 8K Reality by Fulcrum (final)

If you prefer to watch it on YouTube: https://www.youtube.com/watch?v=lI-yGc6Ixr0

Feedback welcome on Pouet: http://www.pouet.net/prod.php?which=65412

And now for the making-of, which is an expanded and illustrated version of the readme:

This is Seven at the keys, explaining a bit about the code side of things. El Blanco has written a very detailed account of the music orchestration side at http://elblancosdigitaldreams.blogspot.com/2015/04/getting-real-pt-1-are-we-crazy.html

I had the idea to make a size-limited version of Second Reality for a long time, but 4K is obviously too limited. Las had been bugging me at GhettoScene 2014 to make an 8K for Revision. When Second Reality turned 20, Future Crew open-sourced the code and the music under the Unlicense (do whatever you want). While the code was of little use (indexed-mode tricks, Finnish comments, …), the permissive license for the music meant we could comply with the GEMA rules at Revision. Since I had no other ideas, I gently tested the willingness of our musicians to try something this crazy. El Blanco volunteered to give it a shot.

Luckily, he was busy selling a house, and I had forgotten to mention to him that Clinkster came with a set of example instruments, so his progress was slow. Luckily because, if he had immediately delivered the first cut of the soundtrack, I’d have said: “SEVEN KILOBYTES? Let’s forget this and focus on finishing our demo for Revision.” Instead, I started working on minimalistic versions of various scenes, trying to get an idea of how much space the shaders would need. I hoped to have about 3 KB of shaders, 1 KB of images and 0.5 KB of init code, which would leave 3.5 KB for the music. We decided from the start not to do the endscroller, and the end credits could be dropped if needed. We knew we would exceed the 8-minute time limit in the Revision compo rules, but having seen in the past how the organisers go out of their way to make things work (the Limp Ninja “our entry broke the beamer” stunt comes to mind), I hoped they would be flexible.

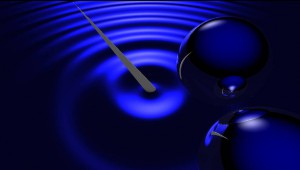

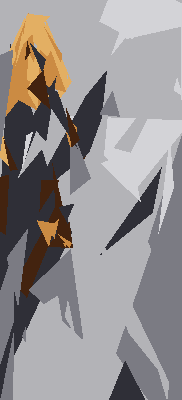

Besides the music, which is the part of Second Reality that has aged the best and that we knew we had to get right, there was also the issue of the graphics. Second Reality has a number of showcase graphics that we couldn’t just drop. Some of those were trivial to create with a raytracer/marcher, such as the spheres over water and the hexagonal nuts at the end. But the title screen, the mountain troll, the demon face and the amazon-on-icebear posed a problem. Since they are not nearly as important as the music, I decided to use a random generation approach inspired by the fantastic Mona 256-byte intro, but with triangles instead of lines.

I wrote a generator that compared the original image with a set of randomly generated triangles colored with a palette of 4-8 handpicked colors. I had hoped about 100 triangles per image would give something recognizable, which was the bar we hoped to pass. 4 images, with 100 2-byte random seeds each plus palettes and generator code, would fit nicely in 1 KB. The results were mixed: images with big areas of similar color, like the demon face, were OK, but the others looked like abstract art at best. Much experimentation with triangle size and color strategies ensued.
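The generator itself isn't part of this write-up, so here is a minimal C sketch of the general idea under assumptions of our own: the target image is already reduced to palette indices, a hypothetical LCG (`next_rand`) expands each 16-bit seed into three corners and a palette index, and the error is simply the number of mismatched pixels. For every triangle slot the encoder tries a batch of candidate seeds and keeps the best one, so the decoder only has to replay the stored seeds. At 2 bytes per triangle, 100 triangles cost 200 bytes per image, or 800 bytes for all four, which is why the plan fit in 1 KB.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define W 32 /* hypothetical working resolution */
#define H 32

/* Hypothetical LCG; the RNG of the original generator is not documented. */
static uint32_t rng_state;
static unsigned next_rand(void) {
    rng_state = rng_state * 1103515245u + 12345u;
    return (rng_state >> 16) & 0x7fffu;
}

/* Which side of edge a->b the point p lies on (sign of the cross product). */
static int edge(int ax, int ay, int bx, int by, int px, int py) {
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

/* Expand a 16-bit seed into three corners plus a palette index and fill that triangle. */
static void draw_seed(uint8_t img[H][W], uint16_t seed, int palette_size) {
    assert(palette_size > 0);
    rng_state = seed;
    int x0 = (int)(next_rand() % W);
    int y0 = (int)(next_rand() % H);
    int x1 = (int)(next_rand() % W);
    int y1 = (int)(next_rand() % H);
    int x2 = (int)(next_rand() % W);
    int y2 = (int)(next_rand() % H);
    uint8_t color = (uint8_t)(next_rand() % (unsigned)palette_size);
    for (int y = 0; y < H; y++)
        for (int x = 0; x < W; x++) {
            int e0 = edge(x0, y0, x1, y1, x, y);
            int e1 = edge(x1, y1, x2, y2, x, y);
            int e2 = edge(x2, y2, x0, y0, x, y);
            /* inside when all three edge tests agree in sign (either winding) */
            if ((e0 >= 0 && e1 >= 0 && e2 >= 0) || (e0 <= 0 && e1 <= 0 && e2 <= 0))
                img[y][x] = color;
        }
}

static int mismatch(uint8_t a[H][W], uint8_t b[H][W]) {
    int n = 0;
    for (int y = 0; y < H; y++)
        for (int x = 0; x < W; x++)
            n += a[y][x] != b[y][x];
    return n;
}

/* Greedy fit: for each slot, keep the candidate seed with the lowest mismatch. */
static int fit(uint8_t target[H][W], uint16_t seeds[], int count, int tries, int palette_size) {
    uint8_t canvas[H][W], trial[H][W];
    memset(canvas, 0, sizeof canvas);
    int err = mismatch(canvas, target);
    for (int i = 0; i < count; i++) {
        int slot_best = -1;
        uint16_t best_seed = 0;
        for (int s = 0; s < tries; s++) {
            uint16_t seed = (uint16_t)(i * tries + s); /* deterministic candidates */
            memcpy(trial, canvas, sizeof trial);
            draw_seed(trial, seed, palette_size);
            int e = mismatch(trial, target);
            if (slot_best < 0 || e < slot_best) { slot_best = e; best_seed = seed; }
        }
        draw_seed(canvas, best_seed, palette_size);
        seeds[i] = best_seed;
        err = slot_best;
    }
    return err;
}

/* Decoder: replay the stored seeds on a cleared canvas. */
static void render(uint8_t out[H][W], const uint16_t seeds[], int count, int palette_size) {
    memset(out, 0, sizeof(uint8_t) * W * H);
    for (int i = 0; i < count; i++)
        draw_seed(out, seeds[i], palette_size);
}
```

Because `render` redraws exactly what `fit` drew, the stored seeds reproduce the encoder's result pixel for pixel. A real version would compare against the full-color original and pick colors from the handpicked palette rather than matching indices.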

Meanwhile, work on the demo was halted completely, as my brain couldn’t stop focusing on the intro. More scenes were converted to shaders with varying levels of authenticity. Using the Windows Speech API to add the “Get down”, “I am not an atomic playboy” and “10 seconds to transmission” samples was surprisingly trivial, and took only 210 bytes (default female voice only). I wasn’t sure about the final size of any of the code, music or images, and anything that went over its size budget could end the production. So feelings fluctuated constantly between “Hey, we can do this if we try hard enough” and “it’ll never work and we’re crazy for even trying”, occasionally veering into “this idea is so obvious that someone else will also try it at NVscene/Tokyo Demofest/Revision” paranoia. El Blanco checked in longer and longer versions of the music, doing his best to imitate the sound of the original to a T. Skaven made instruments hop around channels like crazy, intentionally using the side effects to shape the sound. And while Purple Motion’s composing was much closer to the modern one-instrument-per-track norm, he used untuned samples, so converting those to Clinkster was time-consuming. I had a bad feeling about the speed at which the music grew (500 bytes for 20 extra seconds), but I rationalized that by now he must have created all the instruments, and anything new would be note data only.

Can you guess what this is? If you answered “An armored, bikini-clad amazon with a sword on an icebear”, you’ve probably seen Second Reality before. It looks stretched because the original effect used a 320×400 resolution.

10 days before the party, the music was ready. Together with the extremely minimal shaders (for example, the hexahedrons on the chessboard just rotated, without bouncing, swaying, appearing or disappearing), it clocked in at 12 KB. We clearly had a problem, and since there wasn’t enough time to finish the demo (which has lots of missing art, and a busy graphician), we had to fix it (hard), release it in the 64K compo (disappointing) or release nothing at all (even more disappointing).

I ditched the random-generator approach to the images, since Crinkler actually increased the size of the random seeds to 110-115% during compression. In contrast, shaders compress to 20-40% of their original size, and having more shaders benefits the compression ratio of all shaders. I also started reading the Clinkster code and manual, hoping to find ways to make the music smaller. It turns out the oldskool “1 instrument, many tracks” approach meshes really badly with Clinkster: it includes the instrument definition once per track that uses that instrument, and enumerates all the note/velocity/length combinations played in that track. So El Blanco started shuffling instruments and notes around, dropping the number of instruments that Clinkster generated from 69 to below 30. That also cut the precalc time (more than a minute) in half. Too bad it also triggered a Clinkster bug: if you have more than 127 note/velocity/length combinations, Clinkster stops generating notes prematurely and the music sounds corrupt. Once we knew the cause, the fix was easy, but it took a few days of panic before we got that far…
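Since every distinct note/velocity/length combination is a separate sound to synthesize, it helps to be able to count them before exporting. Below is a small C sketch of such a check; the `Note` record and `within_limit` helper are hypothetical (Clinkster's real input comes from the tracker, in its own format), and the 127 limit is the one described above.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical note record; Clinkster's actual song format is different. */
typedef struct { uint8_t note, velocity; uint16_t length; } Note;

#define CLINKSTER_COMBO_LIMIT 127

/* Count distinct (note, velocity, length) triples. O(n^2), fine for track-sized input. */
static int count_combos(const Note *notes, int n) {
    assert(n >= 0);
    int distinct = 0;
    for (int i = 0; i < n; i++) {
        int seen = 0;
        for (int j = 0; j < i && !seen; j++)
            seen = notes[j].note == notes[i].note &&
                   notes[j].velocity == notes[i].velocity &&
                   notes[j].length == notes[i].length;
        distinct += !seen; /* first occurrence of this triple */
    }
    return distinct;
}

/* Flag note lists that go past the 127-combination limit. */
static int within_limit(const Note *notes, int n) {
    return count_combos(notes, n) <= CLINKSTER_COMBO_LIMIT;
}
```

Reusing the same velocity and length for notes that don't audibly need different ones is the cheapest way to push the count down, which is essentially what the manual shuffling of instruments and notes achieved.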

Despite all the reordering, the music was still too big, so El Blanco started cutting down on notes, reusing some instruments and taking other measures that lowered the quality. Meanwhile, I used the Shader Minifier tool from Ctrl-Alt-Test. It’s an amazing tool to automatically make your shader as small AND as compressible as possible (two different things). It can do things like stripping out whitespace, simplifying math formulas, rewriting small branches to avoid needing curly braces etc. But the most helpful thing it does (which would be very hard for a human to do on their own) is renaming the functions and variables according to the most frequently used letter combinations. This really helps compression a LOT. The downside is that your code becomes rather unreadable. For example, if you have used sin() or max() a lot, it might decide that n() and x() are good names for functions, because the opening bracket already follows the letter n or x often. So your first function will be called n(). But if there is another function with a different signature (number and types of arguments), that will ALSO be called n(…). Only if there is another function with the same signature will it be called x(). So plenty of your functions will have identical names.
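The renaming idea can be illustrated with a deliberately simplified heuristic of our own (this is not Shader Minifier's actual algorithm, which looks at far more context): pick the lowercase letter that most often appears directly before an opening parenthesis, so the new function name produces byte pairs such as `n(` that the compressor has already seen many times.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Return the lowercase letter that most often precedes '(' in src.
   Ties go to the earlier letter; with no matches at all this returns 'a'. */
static char best_function_name(const char *src) {
    assert(src != NULL);
    int counts[26] = {0};
    for (size_t i = 0; src[i] != '\0' && src[i + 1] != '\0'; i++)
        if (src[i + 1] == '(' && islower((unsigned char)src[i]))
            counts[src[i] - 'a']++;
    int best = 0;
    for (int c = 1; c < 26; c++)
        if (counts[c] > counts[best])
            best = c;
    return (char)('a' + best);
}
```

On `sin(a)+sin(b)+max(c)` this picks `n`, matching the example above. Because GLSL allows overloading, a tool following this idea can hand the same letter to every function whose signature differs, and only move to the next-best letter on a signature clash.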

The usual way to develop a 4K is to write readable shaders, run them through Shader Minifier from time to time and see how much space they take in the compiled and compressed executable. Then you continue working on the readable shaders. But that was not an option this time. To save space, I used shader stitching: instead of having one gigantic shader for the entire intro (bad idea, it would be horribly slow) or one shader per scene (also bad, it would take too much space), I put all functions that were used in more than one effect in a “prefix” part (e.g. the random number generator, rotation, 2D noise etc). For each scene, the unique distance function (the mathematical formula that raymarching intros use to determine the shape and color of an object or scene) went in a separate part. And the common render loop was put in a shared “postfix” part. The shader for a single scene thus consisted of the shared prefix, the unique distance function and the shared postfix, all stitched together. While this saved hundreds of bytes of space, it also made it impossible to use Shader Minifier, which expects a single shader as input. And you can’t combine outputs, as different shaders would get different variable names in their common parts. So I let Shader Minifier run on the biggest stitched-together shader, and fixed the other shaders by hand, which was very boring and error-prone, and a big reason I hadn’t done that before. It also meant that any further development had to be done on the minified versions, unless I wanted to go through the manual fixing again…
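In C, the stitching can be sketched with a three-slot array of string pointers whose middle slot is swapped per scene. The GLSL fragments below are hypothetical placeholders, not the intro's shaders. OpenGL can take such an array directly, e.g. `glShaderSource(shader, 3, parts, NULL)`, which concatenates the null-terminated strings in order; the sketch concatenates them itself so the result can be inspected without a GL context.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical shader fragments; in the intro these are minified GLSL. */
static const char *prefix = "float r(vec2 p){return fract(sin(dot(p,vec2(12.9,78.2)))*43758.5);}\n";
static const char *scenes[] = {
    "float f(vec3 p){return length(p)-1.;}\n", /* e.g. a sphere */
    "float f(vec3 p){return p.y+1.;}\n",       /* e.g. a floor plane */
};
static const char *postfix = "void main(){/* shared raymarch loop calling f() */}\n";

/* The small array: prefix, a slot for the scene's distance function, postfix. */
static const char *parts[3];

/* Fill the middle slot for one scene and write the stitched source into out.
   Returns the number of characters written. */
static size_t stitch(int scene, char *out, size_t cap) {
    assert(scene >= 0 && scene < (int)(sizeof scenes / sizeof scenes[0]));
    parts[0] = prefix;
    parts[1] = scenes[scene];
    parts[2] = postfix;
    int n = snprintf(out, cap, "%s%s%s", parts[0], parts[1], parts[2]);
    assert(n >= 0 && (size_t)n < cap); /* buffer must be large enough */
    return (size_t)n;
}
```

Only the scene-specific part differs between the stitched shaders, so the shared prefix and postfix are stored once in the executable.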

I also went over every shader, looking for ways to simplify distance functions or make them more compressible. For example, this was an initial attempt at the distance function for the tetrakis hexahedron (the bouncing things on the chessboard):

```glsl
vec3 j; // color

// n() rotates a 2D vector in place (the rotation helper from the shared prefix)
float tkhh(vec3 r)
{
    n(r.xy, v*100.); // rotation over Z axis, v = time
    n(r.yz, v*100.); // same for X axis
    r = abs(r);      // 2-way symmetric along 3 axes

    // determine color of triangle
    if (r.y > r.x && r.y > r.z) {  // Y axis largest, up or down
        if (r.x > r.z) j = vec3(.27,.31,.6);
        else           j = vec3(1,1,1);
    } else if (r.z > r.x) {        // Z largest, front or back
        if (r.y > r.x) j = vec3(.27,.31,.6);
        else           j = vec3(1,1,1);
    } else {                       // X axis largest, left or right
        if (r.z > r.y) j = vec3(.27,.31,.6);
        else           j = vec3(1,1,1);
    }

    // Make hexahedron by repeated folding over various symmetry axes
    vec3 n1 = vec3(1,0,0);
    n(n1.xz, -.7878);
    r -= 2.*min(0., dot(r,n1))*n1; // fold

    vec3 n2 = vec3(1,0,0);
    n(n2.xz, .7878);
    r -= 2.*min(0., dot(r,n2))*n2; // fold

    vec3 n3 = vec3(0,1,0);
    n(n3.yx, .787);
    r -= 2.*min(0., dot(r,n3))*n3; // fold

    vec3 n4 = vec3(0,0,1);
    n(n4.xz, 2.3563);
    r -= 2.*min(0., dot(r,n4))*n4; // fold

    n(r.xz, -.0085);
    n(r.xy, 1.0974);

    float d = r.x - 4.27; // distance to plane
    return d;
}
```

And here is the final version:

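```glsl
bool j; // facet parity: selects one of the two facet colors

// x() is the (minified) rotation helper; v and y are the two rotation angles
float h(vec3 f, float v, float y)
{
    x(f.xy, v); // rotation over Z axis
    x(f.yz, y); // rotation over X axis
    f = abs(f); // 2-way symmetric along 3 axes

    // determine color of triangle
    j = f.x>f.y ^^ f.y>f.z ^^ f.z>f.x;

    // Make hexahedron by malforming a cube:
    // three conditional swaps sort the components so that f.x <= f.y <= f.z
    if (f.x > f.y) f = f.yxz;
    if (f.x > f.z) f = f.zyx;
    if (f.y > f.z) f = f.xzy;

    return .6*f.z + f.y*.4; // Z = largest axis, Y = middle axis
}
```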

The final version supports the two different hexahedra, so the rotation values have become parameters. The exact color of the facets and the radius are set by the calling function. The choice of “even/odd” triangles has become a single line, and instead of building a mathematically correct tetrakis hexahedron, I just took a cube and deformed each face in a pyramid-like fashion. The resulting formula is not really a correct distance function (the normals on it won’t be entirely correct), but visually it’s good enough and it’s a lot smaller.

One day before the party, and with some more cuts to the music, we were sure we would have SOME 8K version to release, albeit without the voices, and with some parts that were rather boring. At the party place, I tested the intro on the compo machine with Las/Mercury, who was extremely helpful. He agreed that, as there weren’t that many 8Ks, the time limit could be stretched a bit, and he helped me find a quiet spot to continue coding without having to worry that people would see my screen and know what I was working on.

I kept optimizing the shaders, and converted the intro to assembler. This was another task I had been delaying because it’s boring and error-prone. But it really was necessary, as assembler gives you a far better level of control. For example, to do the shader stitching, I had two arrays: a big one with the address of every scene-specific distance function, and a small one with the address of the prefix shader, an empty slot and the address of the postfix shader. When the intro runs, the address of each scene-specific distance function is copied from the big array into the middle of the small array, and the small array is compiled with an OpenGL call. Repeat for every scene. So I expected the data segment to contain these two arrays. Nope. What Visual Studio 2013 actually creates is:

– the two arrays, in the BSS (uninitialized memory) segment

– for EVERY shader, a variable with the address of that shader

– two dynamic initializer functions, to fill the arrays with the addresses from the variables.

– and another function to call the two dynamic initializer functions.

Throwing all that junk out saved another 100 or so bytes, after compression.

Some other tricks that saved bytes:

– go over every constant in your code and see if you can lower the precision. Is it really necessary to define a color with two digits for each of its three components, or is a single digit per component enough?

– inline everything. If a sub-distance function is used only once, plunk it down right in the main distance function.

– look at the various parameters for each scene, such as camera position, rotation, number of raymarch steps, strength of ambient occlusion etc., and try to use the same values as much as possible.

– try to make the variable usage as uniform as possible in all functions. That includes the names of variables. Sadly, Shader Minifier is not always optimal in this regard. If you have, for example, multiple for-loops, it pays off to have them use the same name for the control variable each time.
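The precision tip is easy to automate. Below is a small C helper of our own (not part of Shader Minifier or the intro's toolchain) that prints a constant with the fewest decimals that keep it within a chosen tolerance, keeps a trailing dot on whole numbers so GLSL still sees a float literal, and drops the leading zero GLSL doesn't need (`0.3` becomes `.3`). The tolerance is a judgment call per constant: colors can usually take a coarser one than geometry.

```c
#include <assert.h>
#include <math.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Format value with the given number of decimals; whole numbers keep a
   trailing dot ("1.") so they stay float literals in GLSL. */
static void format_fixed(char *out, size_t cap, double value, int decimals) {
    if (decimals == 0)
        snprintf(out, cap, "%.0f.", value);
    else
        snprintf(out, cap, "%.*f", decimals, value);
}

/* Shortest decimal spelling (0..6 decimals) of value within tolerance. */
static void shortest_constant(double value, double tolerance, char *out, size_t cap) {
    assert(cap >= 32 && tolerance >= 0.0);
    int decimals = 0;
    format_fixed(out, cap, value, decimals);
    while (decimals < 6 && fabs(strtod(out, NULL) - value) > tolerance)
        format_fixed(out, cap, value, ++decimals);
    /* GLSL accepts ".3" for 0.3: drop a leading zero in front of the dot */
    char *digits = (out[0] == '-') ? out + 1 : out;
    if (digits[0] == '0' && digits[1] == '.' && digits[2] != '\0')
        memmove(digits, digits + 1, strlen(digits + 1) + 1);
}
```

With a tolerance of 0.05, the `.27` from the first hexahedron color becomes `.3`, which also makes it identical to other `.3` constants elsewhere in the shaders, helping compression twice.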

A big problem with late-stage optimizations is that Crinkler goes absolutely bonkers. Frequently, removing bytes actually makes your compressed executable bigger. A lot bigger (than a previously compressed version, of course). This is understandable if you had a nice repeating pattern that you broke by accident. But that is not the only case. I noticed I had accidentally left a single tab character inside a shader. This was the only tab character, but after removing it, the exe became (in slow compression mode, with 10000 order tries and 1000 hash tries)… 75 bytes bigger. The reason for this has to do with Crinkler’s models. Crinkler can use the preceding 8 bytes to predict the next bit, but it doesn’t have to use all of them. It can choose to use only the odd or only the even bytes, for example. This choice of which bytes to use for predictions is called a model. And Crinkler can in fact combine multiple models to get better prediction rates. But this choice of models is a bit of an art, and it’s not continuous. Picking a slightly different set of models may cause a big jump in your file size. (More info about Crinkler can be found in “Crinkler Secrets”.)
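The model idea can be sketched in a few lines of C. In this simplification of our own (with an arbitrary FNV-1a style hash, not Crinkler's code), a model is an 8-bit mask over the preceding 8 bytes, and only the selected bytes feed the context hash, so two positions that agree on those bytes share the same statistics. Crinkler additionally weighs and mixes the predictions of several such models, which is left out here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Context hash for predicting the byte at data[pos] under one model.
   Bit k of `model` set = the byte k+1 positions back is part of the context.
   Bytes before the start of the data count as 0. */
static uint32_t context_hash(const uint8_t *data, size_t pos, uint8_t model) {
    assert(data != NULL);
    uint32_t h = 2166136261u ^ model; /* seed with the mask so models don't share slots */
    for (int k = 0; k < 8; k++) {
        if (!(model & (1u << k)))
            continue;
        uint8_t b = (pos > (size_t)k) ? data[pos - 1 - k] : 0;
        h = (h ^ b) * 16777619u; /* FNV-1a step */
    }
    return h;
}
```

With mask `0x01` only the immediately preceding byte matters, so `xbz` and `ybz` look identical at position 2; with `0x03` they don't. With 8 candidate bytes there are 2^8 = 256 possible masks per model, and the compressor searches for a good combination of them, so a tiny edit can tip that search toward a different set of models and move the file size with it.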

Notes made at the party, trying to find 200 extra bytes for the voices.

The number in parentheses is Crinkler’s “ideal compressed size”. The number in square brackets includes the voices.

So basically, after some change Crinkler sometimes gets “lucky” and compresses rather better than expected. The flip side is that, if you hit such a local minimum, almost any change you make will increase the file size, as Crinkler abandons that specific set of models. This is extremely frustrating, as any change you devise (and are certain will improve compression) actually makes the situation worse, even after lots of order tries and 15-minute compression attempts.

There are two ways around this: you can either try (and undo) sets of 2, 3, 4 or more changes, until you find one that breaks through the local minimum. This is time-consuming, of course. Or you can simply pick the change you are most certain *should* improve compression, apply it and compress, use that file size as the “new normal”, and then apply the other changes to see if they lower the size. So you just accept that the lucky outlier was indeed an outlier, and ignore it. After enough improvements, you’ll get back to, and beyond, that small size.
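The first strategy is a brute-force search over combinations of candidate changes. Here is a minimal C sketch of it, with a caller-supplied `size_of` callback standing in for a full Crinkler run, and a hypothetical toy size function that reproduces a local minimum: every change on its own makes the file 10 bytes bigger, but changes 0 and 2 together shrink it by 30.

```c
#include <assert.h>
#include <stddef.h>

/* size_of(mask) = compressed size with the changes in `mask` applied. */
typedef long (*size_fn)(unsigned mask, void *ctx);

/* Try every combination of n candidate changes (n <= 16) and return the mask
   with the smallest compressed size; the baseline is mask 0 (no changes). */
static unsigned best_combination(int n, size_fn size_of, void *ctx, long *best_size) {
    assert(n >= 0 && n <= 16 && size_of != NULL);
    unsigned best_mask = 0;
    long best = size_of(0, ctx);
    for (unsigned mask = 1; mask < (1u << n); mask++) {
        long s = size_of(mask, ctx);
        if (s < best) { best = s; best_mask = mask; }
    }
    if (best_size != NULL)
        *best_size = best;
    return best_mask;
}

/* Toy stand-in for the compressor: each change alone costs 10 bytes,
   but changes 0 and 2 together save 30 bytes overall. */
static long toy_size(unsigned mask, void *ctx) {
    (void)ctx;
    long size = 8192;
    for (int i = 0; i < 3; i++)
        if (mask & (1u << i))
            size += 10;
    if ((mask & 5u) == 5u)
        size -= 50;
    return size;
}
```

Here `best_combination(3, toy_size, NULL, &size)` finds mask 5 (changes 0 and 2) at 8162 bytes, a combination that trying changes one at a time would never keep. The catch is the cost: n candidate changes means 2^n compressions, so 10 candidates at 15 minutes per run already add up to 256 hours, which is why the second strategy is usually the practical one.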

In the end, I managed to find enough to put back in the voices, plus some improvements to the scenes. Unfortunately, when I finally handed a finished version to Las, it crashed on the compo machine… Some panicked debugging later, the culprit turned out to be… the voices, which the compo machine doesn’t support. No idea why, since SAPI has shipped with Windows since XP! Anyway, we were so close to the compo (well beyond the deadline) that I had to make a version without the voices :(

The reaction by the public was great, from the initial confusion over the 1-minute black screen to the shouting when the first recognizable scene popped up, the tension of “did they REALLY put every scene in 8K?!”, and the applause for each additional scene. I hadn’t expected the metaball-modelled images to be greeted with such hilarity, but in retrospect I see how much they differ from the other effects.

After the compo we got lots of feedback on Pouet. Many people disliked the absence of the transitions, while the voices were not popular, or were even seen as a bad point. I hadn’t planned on spending a lot of time on a final version (except for fixing AMD bugs: the intro was finished on my NVIDIA laptop, but I want to support both brands of graphics cards), but on the other hand… Second Reality is a part of our common culture, and making (yet another) remake on a specific platform makes it pretty much impossible for anyone else to do the same. There has been only one Real Reality, only one Second Reality 64, etc. So I thought I owed it to the other people who had the same idea (Hi Blueberry!) to at least try to improve it as much as possible. So I replaced the 200 bytes of the voices with most of the missing transitions, such as the dropping “get down” bars, fade-ins and fade-outs etc. It’s still not perfect, but I think the result is indeed superior, and I regret that I couldn’t show this version at the party.

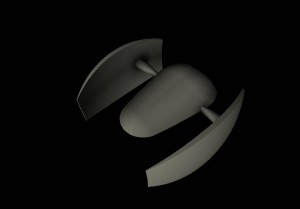

The ship model, which stayed the same except that all parameters were rounded to 1 or 2 significant digits.

All in all, it was a lot of fun and a lot of stress to make this, and we’re quite happy with how it turned out.